Questions CIOs need to answer before committing to Generative AI

Unlocking the potential of artificial intelligence (AI) is a top priority for many forward-thinking organizations, and generative AI has drawn particular attention in recent years. The technology promises to create new content, from art and music to writing and design. But before diving in, CIOs (Chief Information Officers) should consider several important questions. Is generative AI right for their business? How can they ensure success with it? Below are the key questions, along with insights into how CIOs can make informed decisions about adopting generative AI within their organizations.

- Understand your business needs: Before implementing generative AI, CIOs must have a clear understanding of their organization’s specific goals and challenges. What specific business problem or opportunity will generative AI address? Clearly defining the use case helps determine whether generative AI is the right solution, and identifying the areas where it can make a tangible impact ensures that its implementation aligns with strategic objectives.

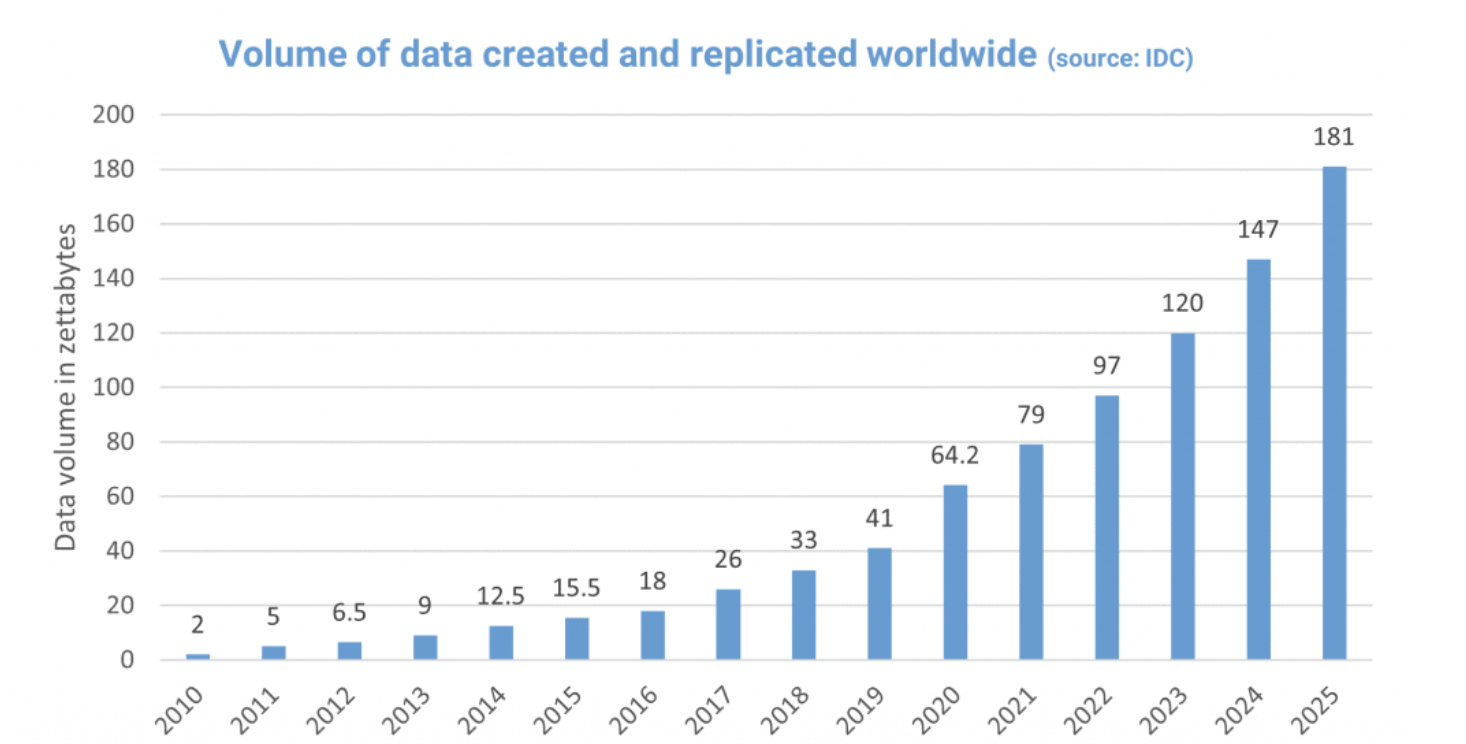

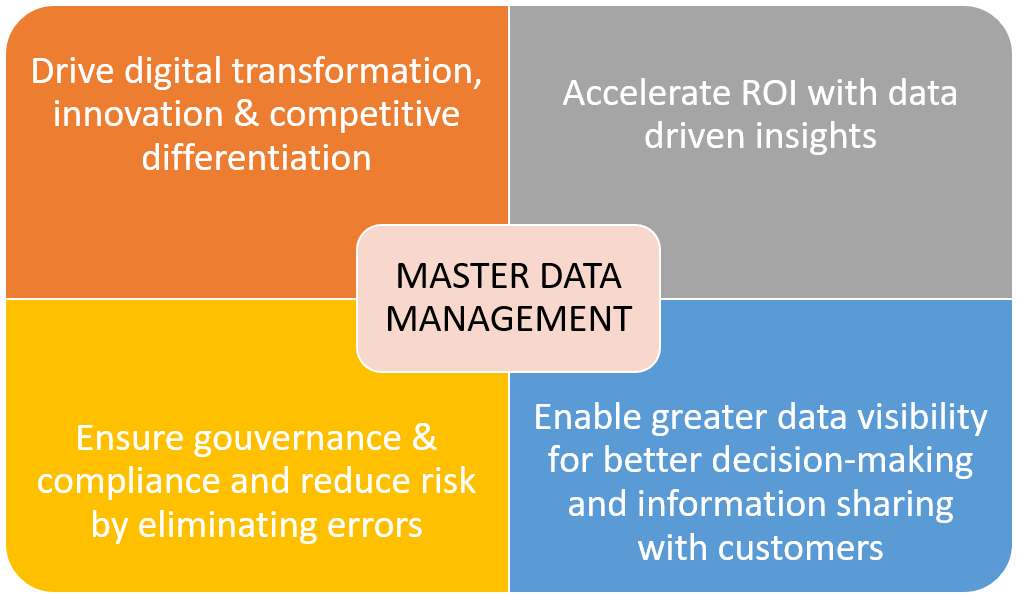

- What data is required for generative AI? Generative AI models typically require large amounts of high-quality data to learn from and to generate meaningful outputs. CIOs should identify the data sources available within their organization and assess whether they meet the requirements for training and deploying generative AI models. They should also work closely with data scientists and domain experts to curate relevant, diverse datasets that reflect the desired outputs.

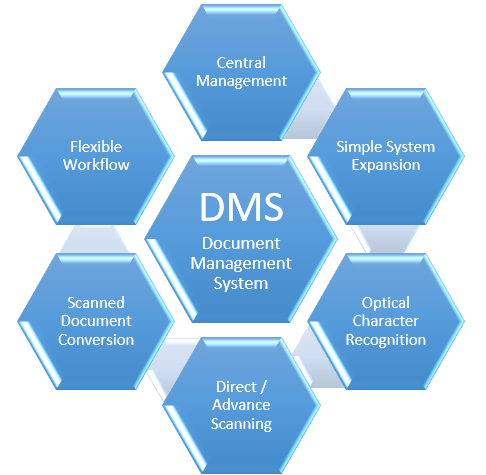

- Choose the right tool and platform: Not all generative AI solutions are created equal. CIOs must carefully evaluate different tools and platforms to find the one that best suits their business requirements. Factors such as ease of use, scalability, customization options, and integration capabilities should be considered before making a decision.

- Required expertise and resources: Implementing generative AI may require specialized skills in areas such as machine learning, data science, and computational infrastructure. CIOs should evaluate whether their organization has the talent and resources needed to develop, deploy, and maintain generative AI systems effectively. Generative AI should also augment human creativity rather than replace it. Encouraging teams across functions to work alongside machine learning models can lead to innovative solutions that blend the best of both worlds.

- Continuously monitor performance: Monitoring the performance of generative AI systems is essential for maintaining quality output over time. Implementing robust monitoring mechanisms will help identify any anomalies or biases in generated content promptly.

- How will generative AI be integrated with existing systems and technologies? CIOs should consider how generative AI will interface with their organization’s current IT infrastructure and whether any modifications or integrations are necessary. Compatibility with existing systems and technologies is crucial for seamless adoption.
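To make the continuous-monitoring point above more concrete, here is a minimal Python sketch of one possible check. It assumes each generated output already receives a numeric quality score (for example from a human reviewer or an automated evaluation), which is an assumption not covered in this article. The class name `QualityMonitor` and its parameters are hypothetical, and a real deployment would likely need richer checks, especially for bias.

```python
from collections import deque
from statistics import mean, stdev


class QualityMonitor:
    """Hypothetical helper: flags scores that deviate sharply from a recent baseline."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, min_samples: int = 5):
        # Keep only the most recent `window` scores as the baseline.
        self.scores = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def record(self, score: float) -> bool:
        """Store a score and return True if it looks anomalous against prior scores."""
        anomalous = False
        # Only evaluate once enough history exists to estimate a baseline.
        if len(self.scores) >= self.min_samples:
            baseline = mean(self.scores)
            spread = stdev(self.scores)
            # A zero spread (identical history) is skipped to avoid dividing by zero.
            if spread > 0 and abs(score - baseline) / spread > self.z_threshold:
                anomalous = True
        self.scores.append(score)
        return anomalous


if __name__ == "__main__":
    monitor = QualityMonitor(window=10)
    for s in [0.80, 0.82, 0.81, 0.79, 0.80, 0.81, 0.30]:
        if monitor.record(s):
            print(f"Score {s} deviates from the recent baseline; review the output.")
```

A rolling baseline is used here so that the check adapts as typical quality shifts over time; a fixed threshold agreed with business stakeholders would be a simpler alternative.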

How to determine if generative AI is right for your business

Determining whether generative AI is the right fit for your business requires careful consideration and evaluation. Here are a few key factors to consider before making a decision:

- Business Objectives: Start by assessing your company’s goals and objectives. What specific challenges or opportunities could be addressed through the use of generative AI? Consider how this technology can align with your long-term vision and help drive innovation.

- Data Availability: Generative AI relies on large datasets to learn patterns, generate content, or make predictions. Evaluate whether you have access to sufficient high-quality data that can fuel the algorithms behind generative AI models.

- Industry Relevance: Analyze how relevant generative AI is within your industry sector. Research existing use cases and success stories in similar industries to gain insights into potential benefits and risks associated with implementation.

- Resource Investment: Implementing generative AI may require significant investment in terms of time, budget, infrastructure, and skilled personnel. Assess if your organization has the necessary resources available or if acquiring them would be feasible.

- Ethical Considerations: Because generative AI autonomously creates synthetic content using models trained on real-world data, it raises ethical concerns about privacy, bias, fairness, accountability, and transparency. Evaluate these considerations thoroughly before committing to generative AI solutions. Compliance with data protection, intellectual property, and other applicable laws is essential.

- Risk Assessment: Conduct a risk assessment to evaluate potential drawbacks such as model limitations, security vulnerabilities, compliance issues or reputational risks that might arise from adopting generative AI technologies.

By evaluating these factors thoughtfully and engaging stakeholders across different areas of expertise within your organization, along with external consultants when needed, you will be better positioned to determine whether generative AI can drive innovation in support of your business objectives.

In today’s rapidly evolving technological landscape, the potential of generative AI cannot be ignored. It holds immense promise for transforming industries by unlocking new levels of creativity and innovation. The key lies in understanding your specific business needs before committing fully to this technology. So ask yourself: How can your organization benefit from generative AI? And what are the potential risks and challenges that need to be addressed?