Big Data and Analytics Revolution in the Transport and Logistics Industry

The transport and logistics sector plays a crucial role in the global economy, ensuring goods are delivered efficiently from one place to another. With the advancement of technology, big data has become an indispensable tool in optimizing operations and improving decision-making processes within this industry.

Big data and analytics help organizations optimize their operations, improve customer service, and make data-driven decisions. From delivering goods to managing complex supply chains, every part of this sector depends on precision and efficiency, and that is where big data and analytics can make the biggest difference.

Here are some key roles and applications of big data and analytics in transport and logistics:

- Route Optimization: Big data analytics can analyze historical traffic data, weather conditions, and other relevant information to optimize delivery routes. This helps reduce fuel consumption, minimize delivery times, and lower operational costs. Real-time tracking and monitoring also let companies collect vast amounts of data from sources such as GPS devices, sensors, and RFID tags, giving them a detailed view of their supply chain network. With that view they can track shipments, monitor vehicle performance, analyze traffic patterns, and make informed routing decisions.

- Demand Forecasting & Inventory Management: By analyzing customer preferences and historical sales data together with variables such as seasonality, promotional activities, and market trends, businesses can forecast future demand for products or services more accurately. This helps optimize inventory management by keeping stock levels adequate while avoiding both overstocking and stockouts.

- Predictive Maintenance: Sensors and data analytics can monitor the condition of vehicles and equipment in real time. Predictive maintenance algorithms use these readings to estimate when maintenance will be needed, so issues can be identified and resolved quickly, improving overall operational efficiency.

- Customer Experience: Analyzing customer data and feedback can help logistics companies tailor their services to better meet customer needs. Sentiment analysis applied to social media posts or customer feedback surveys gives further insight into customers’ needs and expectations, which can lead to higher customer satisfaction and loyalty.

- Risk Management: Data analytics can assess and mitigate risks associated with supply chain disruptions, such as natural disasters or geopolitical events. Companies can develop contingency plans and make informed decisions to minimize disruptions.

- Cost Reduction: By analyzing operational data, logistics companies can identify areas where costs can be reduced, such as optimizing warehouse layouts, improving vehicle routing, and streamlining processes.

- Regulatory Compliance: Big data analytics can help ensure compliance with various regulations, such as emissions standards, safety regulations, and customs requirements, by tracking and reporting relevant data.

- Sustainability: Analyzing data related to fuel consumption and emissions can help logistics companies reduce their environmental impact and meet sustainability goals.

- Market Intelligence: Data analytics can provide valuable insights into market trends, competitor activities, and customer preferences, helping logistics companies make strategic decisions and stay competitive.

- Capacity Planning: By analyzing data on shipping volumes and resource utilization, logistics companies can plan for future capacity needs, whether it involves expanding their fleet or warehouse space.
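
To make the route optimization item above more concrete, here is a minimal sketch of one simple heuristic: greedy nearest-neighbor ordering over great-circle (haversine) distances. It is an illustration only, not a description of any particular company's system. The function names, the depot, and the stop coordinates are all hypothetical, and a real system would also weigh the traffic, weather, and tracking data mentioned in that item.

```python
import math


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_neighbor_route(depot, stops):
    """Order stops greedily: always drive to the closest unvisited stop."""
    remaining = dict(stops)  # copy, so the caller's dict is not modified
    route, current = [], depot
    while remaining:
        name = min(remaining, key=lambda n: haversine_km(current, remaining[n]))
        current = remaining.pop(name)
        route.append(name)
    return route


def route_length_km(depot, stops, order):
    """Total length of depot -> stops in the given order -> depot."""
    points = [depot] + [stops[n] for n in order] + [depot]
    return sum(haversine_km(p, q) for p, q in zip(points, points[1:]))


# Hypothetical depot and delivery stops as (latitude, longitude) pairs.
depot = (52.52, 13.405)
stops = {
    "customer_a": (52.40, 13.05),
    "customer_b": (52.55, 13.45),
    "customer_c": (52.47, 13.30),
}
order = nearest_neighbor_route(depot, stops)
print(order, round(route_length_km(depot, stops, order), 1), "km")
```

The greedy ordering is quick to compute but is not guaranteed to find the shortest possible tour, so it is best read as a baseline to compare more sophisticated methods against.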

Challenges of Using Big Data in Transport and Logistics

- Data Integration: One of the major challenges in using big data in transport and logistics is integrating data from many different sources. The industry generates massive amounts of information from multiple channels, such as GPS trackers, sensors, weather forecasts, and customer feedback. However, this data often exists in different formats and systems, making it difficult to integrate and analyze effectively.

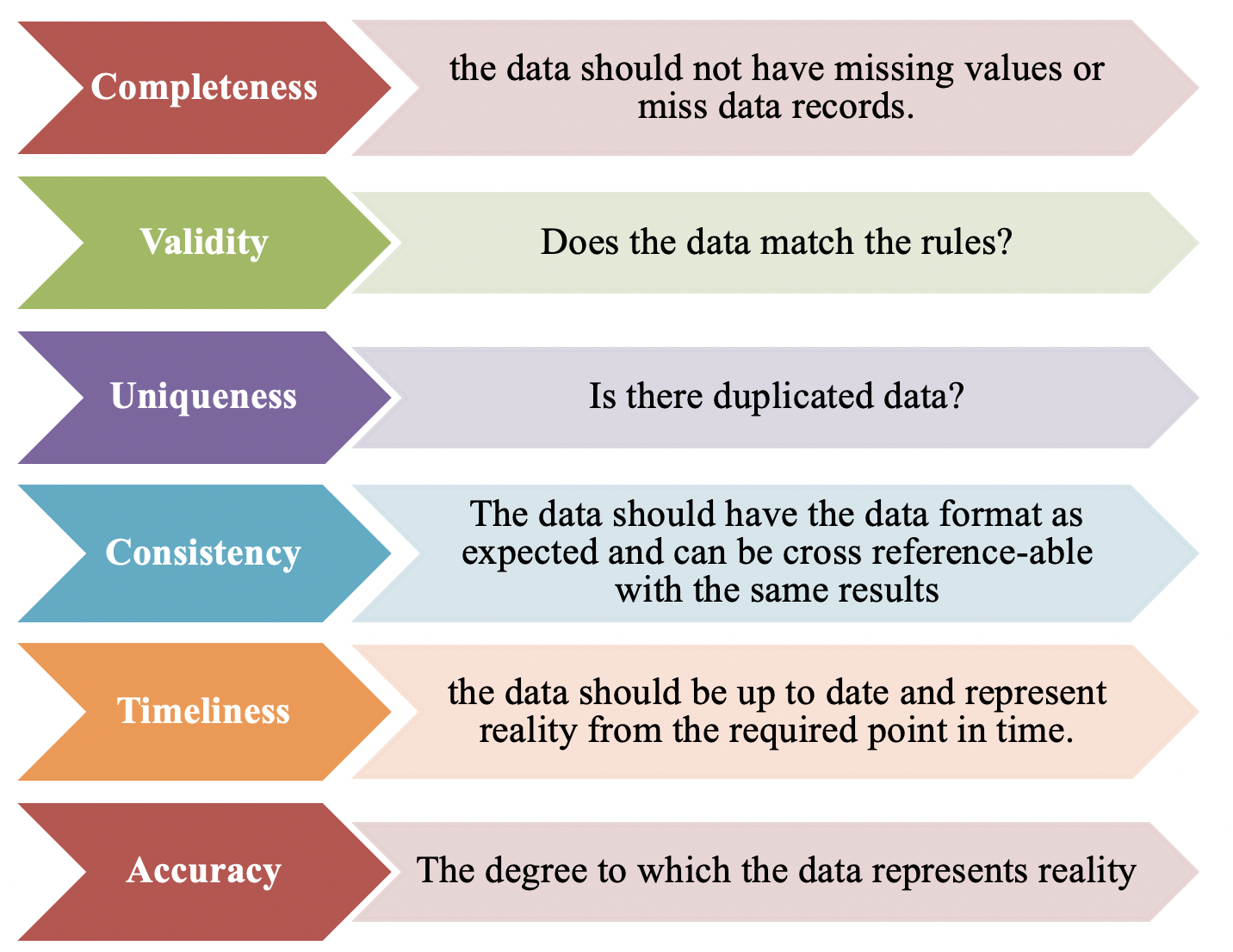

- Data Quality: Ensuring the accuracy and reliability of the collected data poses another challenge. With numerous variables involved in transportation operations, there is a risk of incomplete or inconsistent data sets that can lead to misleading insights or flawed decision-making.

- Privacy Concerns: Because big data analytics relies on collecting vast amounts of personal information about individuals’ movements and behaviors, privacy concerns arise within the transport and logistics sector. Companies must adhere to strict regulations regarding consent, storage security, anonymization techniques, and user rights protection.

- Scalability Issues: Dealing with large volumes of real-time streaming data requires robust infrastructure capable of high-velocity processing. Scaling up existing systems to accommodate increasing volumes can be complex and costly for organizations.

- Skilled Workforce: Building a competent team with expertise in big data analytics is crucial but challenging because the skill set is specialized. Professionals who combine technical skills (such as data mining techniques) with domain knowledge (such as transportation operations) can be difficult to find.

- Technology Adoption: Adopting new technologies such as IoT devices or cloud computing for data collection and analysis presents implementation challenges for traditional transportation companies, which may have outdated infrastructure or face internal resistance to change.

- Data Security: Protecting sensitive information from unauthorized access remains a critical concern when dealing with big datasets containing valuable business intelligence that could be exploited if not adequately protected.
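
The data integration and data quality challenges above can be illustrated with a small sketch. It assumes two hypothetical feeds, a GPS tracker that reports epoch seconds and miles per hour, and a telematics unit that reports ISO 8601 timestamps and kilometres per hour, and maps both onto one common record type before applying a basic validity check. All field names, the `Reading` type, and the 200 km/h plausibility limit are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Reading:
    """Common schema that every source is converted into."""
    vehicle_id: str
    timestamp: datetime          # always timezone-aware UTC
    speed_kmh: Optional[float]   # None when the source did not report a speed


def from_gps_tracker(rec: dict) -> Reading:
    # Hypothetical feed: numeric id, epoch seconds, speed in miles per hour.
    mph = rec.get("speed_mph")
    return Reading(
        vehicle_id=str(rec["id"]),
        timestamp=datetime.fromtimestamp(rec["ts"], tz=timezone.utc),
        speed_kmh=None if mph is None else mph * 1.609344,
    )


def from_telematics(rec: dict) -> Reading:
    # Hypothetical feed: string id, ISO 8601 time with UTC offset, speed in km/h.
    return Reading(
        vehicle_id=rec["vehicle"],
        timestamp=datetime.fromisoformat(rec["time"]).astimezone(timezone.utc),
        speed_kmh=rec.get("kmh"),
    )


def is_valid(r: Reading, max_speed_kmh: float = 200.0) -> bool:
    """Basic quality gate: speed must be present and physically plausible."""
    return r.speed_kmh is not None and 0.0 <= r.speed_kmh <= max_speed_kmh


readings = [
    from_gps_tracker({"id": 17, "ts": 1700000000, "speed_mph": 50.0}),
    from_telematics({"vehicle": "17", "time": "2023-11-14T23:13:25+01:00", "kmh": None}),
]
clean = [r for r in readings if is_valid(r)]
rejected = [r for r in readings if not is_valid(r)]
print(len(clean), "usable reading(s),", len(rejected), "rejected")
```

Converting every source into a single schema first means that quality checks, and any later analysis, only have to be written once. Rejected records are kept in a separate list here so they can be inspected instead of silently dropped.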

Addressing these challenges requires collaboration between stakeholders to develop innovative solutions tailored specifically for the transport industry’s unique needs.

In summary, big data and analytics are transforming the transport and logistics industry by providing valuable insights, optimizing operations, reducing costs, improving customer service, and helping companies stay competitive in a rapidly changing environment. This data-driven approach is becoming increasingly essential for success in the industry.