The Role of AI-Driven Forecast Models in Business Operations

In today’s fast-paced business landscape, organizations are constantly looking for ways to optimize their operations and unlock hidden sources of value. Artificial Intelligence (AI) has become an important tool for this, bringing data-driven insights and predictive capabilities to many industries. AI-driven forecast models, in particular, can change how businesses make decisions and run their operations. This article explores what AI-driven forecast models are and how they improve operational efficiency and value creation.

What are AI-driven forecast models?

AI-driven forecast models are analytical tools that use machine learning algorithms and data analysis techniques to predict future outcomes from patterns in historical data. These models can process large volumes of structured and unstructured data, learn from historical trends, and produce predictions about future events. How accurate those predictions are depends on the quality and relevance of the data the models learn from.
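To make the idea of predicting from historical patterns concrete, here is a minimal sketch in Python. It uses simple exponential smoothing, a classical technique chosen only for illustration; production AI-driven models are typically far more elaborate. The function name, the `alpha` parameter, and the sales figures are all assumptions made for this example.

```python
def exponential_smoothing_forecast(history, alpha=0.5):
    """Return a one-step-ahead forecast using simple exponential smoothing.

    history: past observations, oldest first (hypothetical data)
    alpha:   smoothing factor in (0, 1]; higher values weight recent data more
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")

    level = history[0]  # start the running level at the first observation
    for value in history[1:]:
        # Blend the newest observation with the running level.
        level = alpha * value + (1 - alpha) * level
    return level


# Hypothetical monthly unit sales, oldest first.
monthly_sales = [100, 110, 120, 130]
forecast = exponential_smoothing_forecast(monthly_sales, alpha=0.5)
print(forecast)  # 121.25
```

Even this small model shows the basic pattern: past observations go in, a weighted summary of them comes out as the next-period estimate, and a single parameter controls how quickly the model reacts to recent change.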

The Role of AI in Operations

AI plays a crucial role in transforming traditional operational processes. By analyzing complex datasets far faster than manual review allows, AI-driven forecast models help businesses make well-informed decisions promptly. They enable organizations to address challenges and opportunities proactively, optimizing various aspects of their operations.

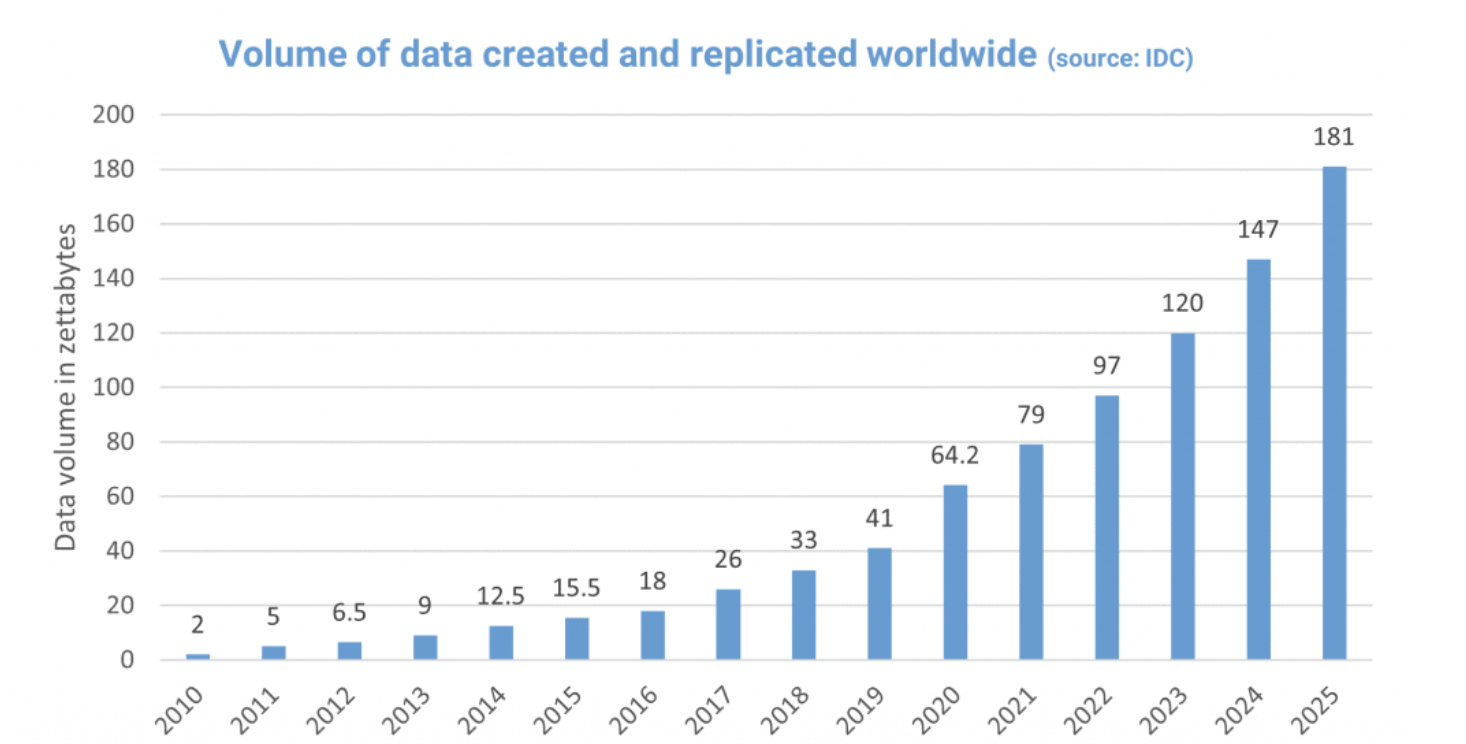

- Extracting insights from vast data: One of the primary advantages of AI-driven forecast models is their ability to process and analyze vast amounts of data from multiple sources. Businesses can gain valuable insights from this data, allowing them to identify patterns, trends, and correlations that were previously hidden or too complex to discover using conventional methods.

- Improving accuracy and reducing errors: Well-trained AI-driven forecast models can predict future outcomes more accurately and consistently than manual methods. By reducing manual steps, these models lower the risk of human error and inconsistent judgment, although they can still inherit biases present in the data they learn from. Organizations can use these forecasts to make better decisions and allocate resources more effectively.

- Allocating resources effectively: Resource allocation is a critical aspect of operational management. AI-driven forecast models can help organizations optimize resource allocation by analyzing historical data and predicting demand patterns. This enables businesses to allocate their resources efficiently, ensuring that they meet customer demands while minimizing waste and unnecessary costs.

- Inventory management and supply chain optimization: AI-driven forecast models improve inventory management by predicting demand fluctuations and inventory needs. With this information, businesses can streamline their supply chains, reducing inventory holding costs and avoiding stockouts or overstock situations.

- Predicting customer preferences: Understanding customer behavior is vital for businesses to tailor their products and services to meet customers’ preferences effectively. AI-driven forecast models analyze customer data and behavior to predict trends and preferences, helping organizations stay ahead of the competition and retain their customer base.

- Anticipating market trends: In a dynamic marketplace, predicting market trends is crucial for business survival and growth. AI-driven forecast models leverage historical data and market indicators to anticipate upcoming trends, enabling organizations to respond proactively to changing market conditions and gain a competitive advantage.

- Real-time monitoring and detection: AI-driven forecast models facilitate real-time monitoring of operations, enabling organizations to identify inefficiencies promptly. With instant alerts and insights, businesses can take immediate corrective actions, preventing potential disruptions and enhancing operational efficiency.

- Implementing corrective actions: By pinpointing operational inefficiencies, AI-driven forecast models guide organizations in implementing targeted corrective actions. Whether it’s optimizing production processes or improving customer service, these models provide valuable recommendations to enhance overall operational performance.

- Streamlining processes with AI: AI-driven forecast models can streamline complex processes within an organization, reducing manual intervention and the delays that come with it. This frees up resources for strategic decision-making, driving efficiency and productivity.

- Automating repetitive tasks: AI automation streamlines routine tasks, enabling employees to concentrate on high-value activities that require human creativity and problem-solving skills. Automation also minimizes the risk of errors, leading to increased productivity and cost savings for businesses.
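Two of the points above, inventory management and real-time monitoring, can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not a description of any particular product. It assumes a daily demand forecast is already available, that past daily forecast errors are roughly independent and normally distributed, and that a `z` value of 1.65 approximates a 95% service level. All function names, parameters, and numbers are hypothetical.

```python
import math
import statistics


def reorder_point(forecast_per_day, lead_time_days, past_errors, z=1.65):
    """Forecast demand over the lead time plus a safety-stock buffer.

    past_errors: historical daily forecast errors (actual - forecast).
    z: service-level factor (assumption: ~1.65 for roughly 95%).
    """
    error_std = statistics.stdev(past_errors)  # sample standard deviation
    # Standard safety-stock formula, assuming independent daily errors.
    safety_stock = z * error_std * math.sqrt(lead_time_days)
    return forecast_per_day * lead_time_days + safety_stock


def is_anomaly(actual, forecast, past_errors, threshold=3.0):
    """Flag an observation whose error exceeds threshold * typical error."""
    error_std = statistics.stdev(past_errors)
    return abs(actual - forecast) > threshold * error_std


# Hypothetical inputs: 50 units/day forecast, 4-day supplier lead time.
errors = [-4, 2, -1, 3, 0]
print(round(reorder_point(50, 4, errors), 2))
print(is_anomaly(actual=60, forecast=50, past_errors=errors))  # True
print(is_anomaly(actual=52, forecast=50, past_errors=errors))  # False
```

The same forecast serves both purposes: it sets the stock level to reorder at, and it defines what "normal" looks like so that large deviations can trigger an alert for corrective action.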

The Future of AI-Driven Forecast Models

- Advancements and potential applications: As AI technology continues to evolve, so will AI-driven forecast models. Advancements in machine learning algorithms, computing power, and data availability will unlock new possibilities for forecasting accuracy and expand the range of applications across industries.

- Ethical considerations in AI adoption: As AI-driven forecast models become more widespread, ethical considerations become critical. Organizations must adhere to ethical guidelines and principles to ensure responsible AI deployment, safeguarding against potential negative impacts on society and the workforce.

Conclusion

AI-driven forecast models are a transformative force in today’s business landscape. By leveraging vast amounts of data and powerful algorithms, these models enable businesses to optimize operations, enhance decision-making, and unlock multiple sources of value. As organizations embrace AI’s potential, they must also address challenges related to data privacy, bias, and ethical considerations to harness the true power of AI-driven forecast models.