GDPR with CIAM: The devil is in the details

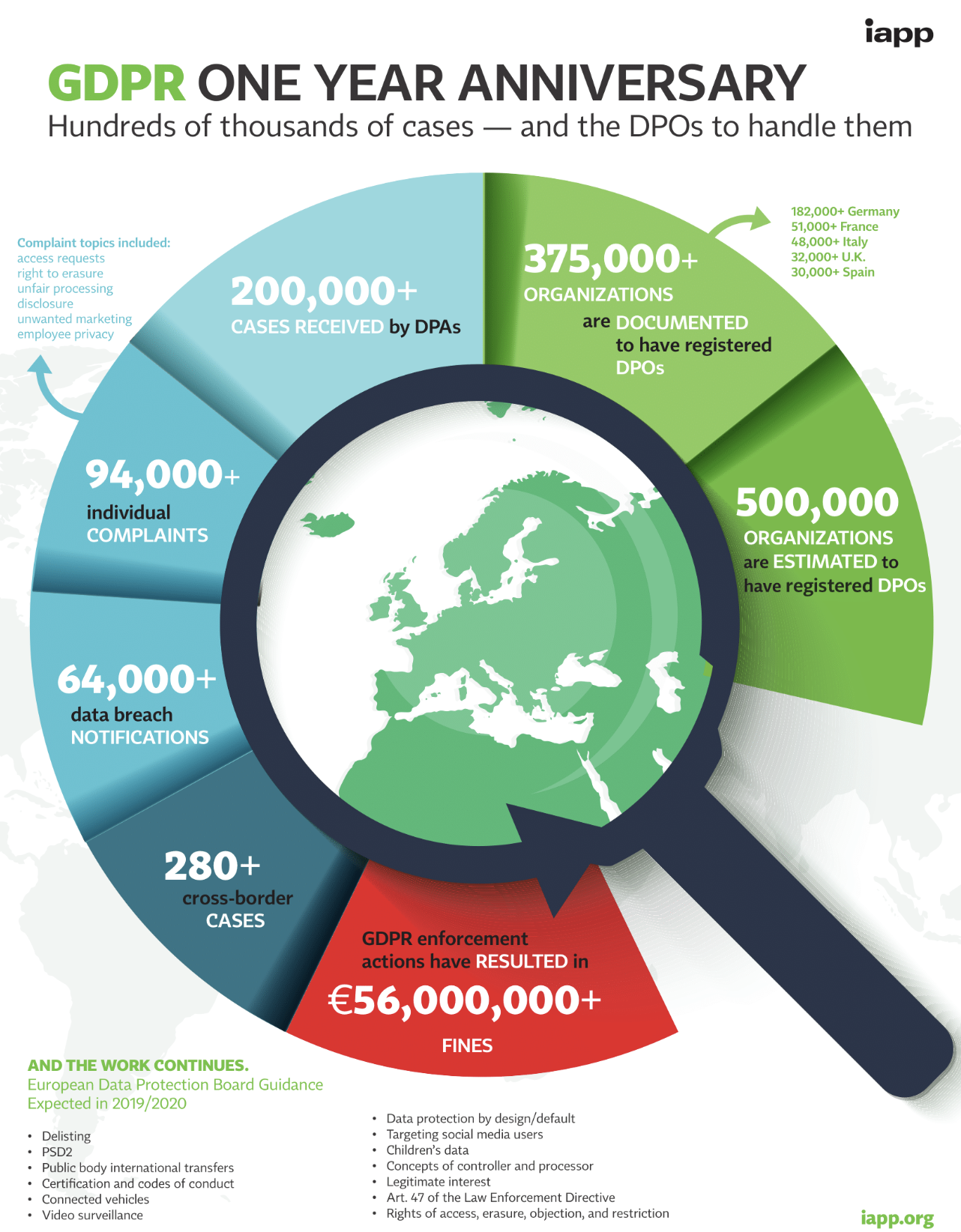

The EU General Data Protection Regulation (GDPR) has been in effect since May 25, 2018. It fundamentally changes the requirements for processing personal data and gives EU citizens significantly more control over their personal data – no matter where and how it is processed. Organizations around the world must follow its rules when handling the personal data of EU citizens. Anyone who fails to meet these obligations risks a fine of up to 20 million euros or four percent of worldwide annual turnover, whichever is higher. Even so, many of the regulation's technical requirements still seem difficult for companies to implement. This article gives an overview of what they are and how Customer Identity & Access Management (Customer IAM or CIAM) paves the way to compliance.

The articles of the regulation essentially define how personal data is collected, stored, accessed, modified, transported, secured and deleted. In the age of digital transformation, companies must therefore find the right balance between legal compliance on the one hand and effective customer care on the other.

Not only must companies give data subjects more say in what happens to their personal data; the controller also needs documented consent from the data subject for the collection, storage and use of that data. In addition, all personal data must be secured using appropriate technical and organisational measures, in proportion to the likelihood and severity of the risk. These are some of the main issues companies face on the road to compliance:

- Insufficient consent of the data subjects: The basic level of consent to data use that used to be enough, including opt-out procedures, no longer meets the provisions of the GDPR.

- Data silos: Personal data is often stored across multiple systems, for example for analytics, order management or CRM. This complicates compliance with GDPR requirements such as data access and portability.

- Lack of data governance: Data access must be enforced for every application through centralized data access policies. These policies should give equal weight to consent, privacy preferences, and business needs.

- Poor application security: Customer personal data that is fragmented and unsecured at the data layer is vulnerable to data breaches.

- Limited self-service access: Customers must be able to manage their profiles and preferences themselves – across all channels and devices.

A robust Customer Identity & Access Management (CIAM) solution can solve many of these seemingly insurmountable problems. Such solutions synchronize and consolidate data silos with tools such as real-time or scheduled bi-directional synchronization, data schema mapping, support for multiple connection methods and protocols, and built-in redundancy, failover and load balancing.
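To make the idea of schema mapping and silo consolidation more concrete, here is a minimal Python sketch. It is not taken from any particular CIAM product: the source systems (`crm`, `orders`), their field names, and the helper functions are all hypothetical, and it only shows a one-way merge into a unified profile rather than full bi-directional synchronization.

```python
from typing import Any

# Hypothetical field mappings: source-system field name -> unified profile field name.
SCHEMA_MAPS: dict[str, dict[str, str]] = {
    "crm": {"EmailAddress": "email", "FullName": "name", "Phone": "phone"},
    "orders": {"customer_email": "email", "ship_to_name": "name"},
}


def map_record(source: str, record: dict[str, Any]) -> dict[str, Any]:
    """Rename a source record's fields to the unified schema, dropping unmapped fields."""
    mapping = SCHEMA_MAPS[source]
    return {mapping[k]: v for k, v in record.items() if k in mapping}


def consolidate(records: list[tuple[str, dict[str, Any]]]) -> dict[str, dict[str, Any]]:
    """Merge mapped records into one profile per e-mail address.

    Earlier records win: later records only fill in fields that are still missing.
    """
    profiles: dict[str, dict[str, Any]] = {}
    for source, record in records:
        mapped = map_record(source, record)
        key = mapped["email"].lower()  # assumption: e-mail is the shared identifier
        profile = profiles.setdefault(key, {"email": key, "sources": []})
        for field_name, value in mapped.items():
            profile.setdefault(field_name, value)
        profile["sources"].append(source)  # remember where the data came from
    return profiles
```

With one profile per customer, a request for data access or portability can be answered from a single place instead of querying each silo separately.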

CIAM solutions also facilitate the collection of consent across multiple channels and allow searching for specific attributes. Along with enforcing mandatory consent collection based on geographic, business, industry or other policies, they give customers the opportunity to revoke their consent at any time. Customers can also view, edit, and assert their preferences across channels and devices through pre-built user interfaces and APIs.
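The consent handling described here could look roughly like the following Python sketch. Everything in it is an illustrative assumption rather than a real CIAM API: the `ConsentLedger` class, the `CONSENT_REQUIRED_REGIONS` policy, and the simplification that consent is tracked per user and purpose.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy: regions where explicit opt-in consent is enforced.
CONSENT_REQUIRED_REGIONS = {"EU"}


@dataclass
class ConsentRecord:
    purpose: str
    channel: str  # where consent was collected, e.g. "web" or "mobile"
    granted_at: datetime
    revoked_at: Optional[datetime] = None

    @property
    def active(self) -> bool:
        return self.revoked_at is None


@dataclass
class ConsentLedger:
    # Keyed by (user_id, purpose) so each purpose is consented to separately.
    records: dict = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str, channel: str) -> None:
        """Store documented consent with a timestamp and collection channel."""
        now = datetime.now(timezone.utc)
        self.records[(user_id, purpose)] = ConsentRecord(purpose, channel, now)

    def revoke(self, user_id: str, purpose: str) -> None:
        """Mark consent as revoked; the record is kept as documentation."""
        record = self.records.get((user_id, purpose))
        if record is not None and record.active:
            record.revoked_at = datetime.now(timezone.utc)

    def may_process(self, user_id: str, purpose: str, region: str) -> bool:
        """Enforce the region policy: no active consent, no processing."""
        if region not in CONSENT_REQUIRED_REGIONS:
            return True
        record = self.records.get((user_id, purpose))
        return record is not None and record.active
```

Keeping revoked records instead of deleting them is a deliberate choice in this sketch: the timestamps are what make the consent history documentable.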

Most of these solutions include numerous centralized data-level security features, including data encryption in every state (at rest, in motion, and in use), restrictions on access to records, tamper-proof logging, active and passive alerts, integration with third-party monitoring tools, and more.
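One common way to approach tamper-proof logging is a hash chain, in which every log entry includes the hash of the previous one. The Python sketch below illustrates that general technique under stated assumptions; it is not how any specific CIAM product implements its logs, the function names are hypothetical, and strictly speaking it makes tampering detectable rather than impossible.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, chaining it to the hash of the last entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)})


def verify(log: list) -> bool:
    """Recompute every hash; any edited, removed or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Because each hash depends on all earlier entries, changing a past access event means recomputing every later hash, which is easy to detect if the latest hash is also stored somewhere outside the log.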

In this way, a suitable CIAM solution helps put many of the GDPR's technical requirements into practice. It even goes beyond the requirements of the regulation to create safe, convenient and personalized customer experiences – the basis for trust and loyalty.

Sources:

Rules for business and organisations (original title: Règles pour les entreprises et les organisations)