Is Your Public Cloud Data Secure?

As digitalization advances, business requirements are changing rapidly, and the rise of cloud applications shows no sign of slowing down. More and more organizations are adopting cloud computing to benefit from increased efficiency, better scalability, and faster deployments. According to a report by Linker, the global public cloud computing market is expected to reach $623.3 billion by 2023. Being able to provision business applications quickly, so that new and improved business processes can be introduced, is central to this shift. Many companies therefore treat outsourcing workloads to the public cloud as a priority: high availability, scalability, and cost efficiency make it possible to implement innovative operational changes with little effort.

As more workloads shift to the cloud, cybersecurity professionals remain concerned about the security of the data, systems, and services hosted there. The public cloud exposes businesses to a large number of new threats, and its dynamic character means that relying on traditional security technologies and approaches is no longer enough. Many companies therefore have to rethink how they assess the risk to data stored in the cloud.

When moving their workloads into the public cloud, companies often assume that their business is automatically protected. Unfortunately, that protection is not guaranteed. Amazon, Microsoft, and Google do secure parts of their clouds, but it is not their core business or priority. To cope with the new security challenges, security teams have to update their security posture and strategies.

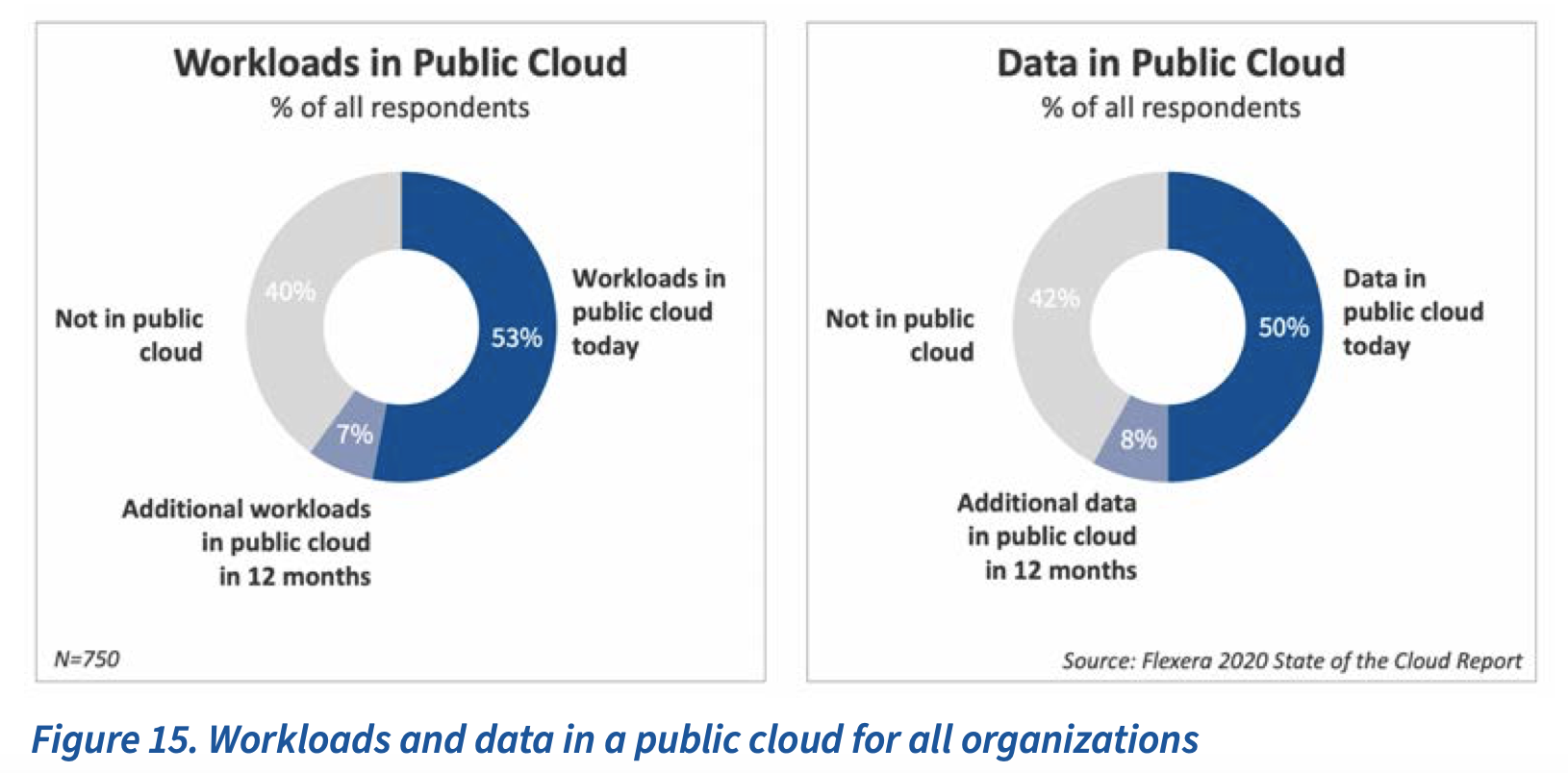

A report by RightScale shows that the average business runs 38% of its workloads in the public cloud and 41% in a private cloud. Enterprises typically run a larger share of their workloads in a private cloud (46%) and a smaller share (33%) in the public cloud. Small and medium-sized businesses, on the other hand, prefer the public cloud (43%) to investing in more expensive private solutions (35%).

The cloud computing statistics also show that public cloud spend is growing three times faster than private cloud usage.

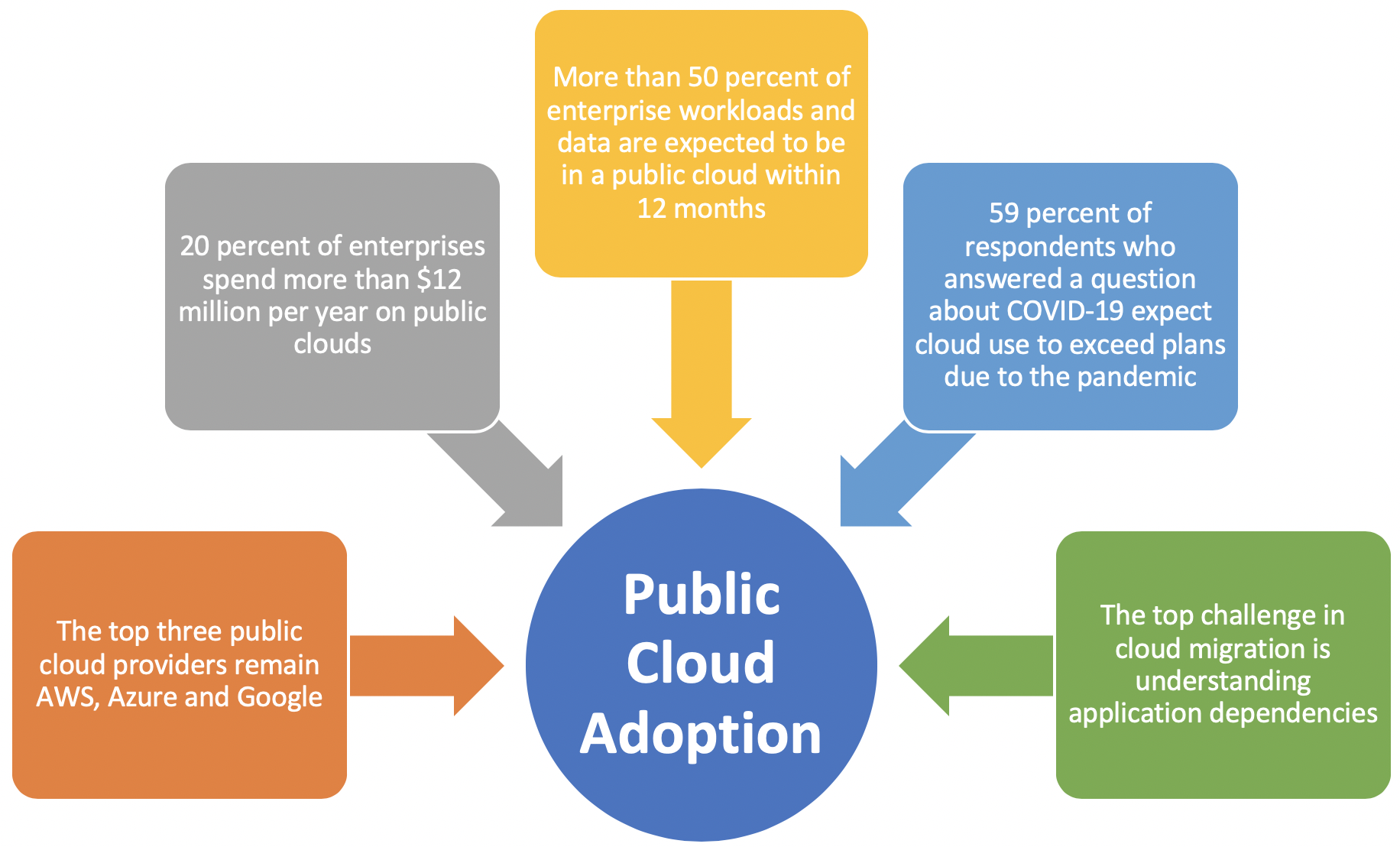

For this survey, 786 IT professionals were questioned about their adoption of cloud infrastructure and related technologies. 58% of the respondents represented enterprises with more than 1,000 employees. For the majority of them, more than 50% of enterprise workloads and data are expected to be in a public cloud within 12 months. More than half of the respondents said they will consider moving at least some of their sensitive consumer data or corporate financial data to the cloud.

Even though public cloud adoption continues to accelerate, 83% of enterprises indicate that security is one of their top challenges, followed by managing cloud spend (82%) and governance (79%).

Securing the cloud environment is one of the biggest challenges and barriers in cloud adoption. Companies that want to protect their data in the cloud must ensure that the environment is used safely. This requires additional measures at different levels:

Secure access with Identity and Access Management (IAM)

Because data stored in the cloud can be accessed from any location and any device, access control and whitelisting are among the first and strongest measures for safeguarding your cloud. Managing people, roles, and identities is essential to cloud security.

In most companies, user rights for applications, databases, and content are maintained manually in separate access lists. Regulations for dealing with security-relevant technologies are kept in yet other places. This lack of automation, combined with access management that is spread across systems, means that the identity and context attributes needed for dynamic Identity and Access Management (IAM) cannot be taken into account.

Building an identity repository with a clearly defined type of access for each user identity, together with strict access policies, is therefore the first step toward handling access rights dynamically. For example, a policy can specify that employee X may log in only from certain geographic locations, only over a secure network connection, and may access only a selected number of files.

While these policies can be managed by different individuals with appropriate authority in the organization, they must exist in a single, reliable, and up-to-date location – across all resources, parameters, and user groups.
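
The "employee X" rule described above can be sketched as a small deny-by-default policy check. This is only an illustration and not the policy format of any particular cloud provider: the names `AccessPolicy`, `AccessRequest`, and `is_allowed`, the country codes, and the file paths are all assumptions made for this example.

```python
from __future__ import annotations

from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical policy record kept in one central repository."""
    user: str
    allowed_countries: frozenset[str]   # where logins may originate
    require_secure_network: bool        # e.g. VPN or corporate network only
    allowed_paths: tuple[str, ...]      # glob patterns for permitted files


@dataclass(frozen=True)
class AccessRequest:
    """Context attributes collected at the moment of access."""
    user: str
    country: str
    secure_network: bool
    path: str


def is_allowed(policy: AccessPolicy, request: AccessRequest) -> bool:
    """Deny by default; allow only if every condition of the policy holds."""
    if request.user != policy.user:
        return False
    if request.country not in policy.allowed_countries:
        return False
    if policy.require_secure_network and not request.secure_network:
        return False
    # The requested file must match at least one permitted pattern.
    return any(fnmatchcase(request.path, p) for p in policy.allowed_paths)


# Illustrative policy for "employee X" (all values are made up).
employee_x_policy = AccessPolicy(
    user="employee_x",
    allowed_countries=frozenset({"DE", "FR"}),
    require_secure_network=True,
    allowed_paths=("finance/reports/*",),
)
```

A request from `employee_x` in an allowed country, over a secure network, for `finance/reports/q1.pdf` would pass this check; changing any one of those attributes causes a denial. Keeping every policy in one structure like this is what lets different administrators maintain the rules while the evaluation stays in a single place.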

Data loss prevention (DLP)

As data is one of your organization’s most valuable assets, protecting it and keeping it secure must be one of your top priorities. To accomplish this, a number of DLP controls must be implemented at various levels in all cloud applications, and they must allow IT administrators to intervene. «DLP (data loss prevention) is the practice of detecting and preventing confidential data from being “leaked” out of an organization’s boundaries for unauthorized use. Data may be physically or logically removed from the organization either intentionally or unintentionally.»
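
To make the "detecting" half of DLP concrete, here is a minimal sketch of a content check that could run before text leaves the organization. It is not how any specific DLP product works: the function names, the "confidential" label rule, and the choice of payment card numbers as the sensitive pattern are assumptions for illustration. Candidate card numbers are confirmed with the Luhn checksum to reduce false positives.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:      # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_sensitive_content(text: str) -> list[str]:
    """Return a list of reasons why the text should not leave unreviewed."""
    findings = []
    # Hypothetical rule: documents carry a classification label.
    if "confidential" in text.lower():
        findings.append("classification label")
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            findings.append("possible payment card number")
            break
    return findings


def may_leave_organization(text: str) -> bool:
    """Block by default whenever any rule reports a finding."""
    return not find_sensitive_content(text)
```

In practice a blocked message would be routed to an administrator for review rather than silently dropped, which corresponds to the ability to intervene mentioned above.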

Data Encryption

Sensitive data must not be transmitted over public networks without adequate encryption. One of the most effective cloud security measures you can take is therefore to encrypt all of your sensitive data in the public cloud. This covers all types of data: data at rest inside the cloud, archived and backed-up data, and data in transit. Encryption protects you in the event of data exposure, because the data remains unreadable and confidential according to your encryption decisions. By properly encrypting data, organizations can also address compliance with government and industry regulations, including GDPR.
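
For the data-in-transit part, a client can refuse to talk to cloud endpoints over weak or unverified connections. The sketch below uses Python's standard `ssl` module; the helper name `strict_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions for this example, not a requirement stated in this article. Encryption at rest is not shown, since it depends on the provider's key management or on a dedicated cryptography library.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server.

    create_default_context() enables certificate and hostname
    verification; the minimum protocol version is then pinned explicitly.
    """
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed baseline
    return context


# Usage idea (no request is made here): pass the context to an HTTPS
# client, for example urllib.request.urlopen(url, context=context).
context = strict_tls_context()
```

Pinning these settings in one helper means every upload or download path in an application shares the same transport policy instead of each call site choosing its own.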