Best Ways to Drive Corporate Growth in Uncertain Times

Are you feeling the pressure to drive corporate growth in these uncertain times? You're not alone. Today's business climate is full of challenges and uncertainties, which makes it more important than ever for companies to find innovative ways to thrive. Let's explore some of the best strategies for driving corporate growth during uncertain times. Whether through diversification, innovation and adaptation, or strategic partnerships and collaborations, there are plenty of avenues to explore!

The importance of driving corporate growth in uncertain times

In today's ever-changing business landscape, driving corporate growth is crucial, and never more so than in uncertain times. A company's ability to adapt and thrive amid uncertainty can determine its long-term success or failure. Growth also keeps a business ahead of its competitors: by continuously seeking opportunities for expansion and improvement, a company can build a competitive edge that sets it apart in its industry.

Corporate growth helps create stability and resilience within an organization. In uncertain times, when market conditions are volatile and unpredictable, companies that have focused on growth strategies are better equipped to weather economic downturns and navigate through challenging circumstances.

Driving corporate growth also fosters innovation. When companies actively seek new markets or develop new products and services, they stimulate creativity within their teams. This not only drives profitability but also helps organizations stay relevant as customer demands change. Finally, sustained growth increases shareholder value: as a company expands its operations and generates higher profits over time, shareholders earn greater returns on their investments.

Strategies for driving growth during uncertain times:

- Diversification: One of the most effective strategies for driving corporate growth in uncertain times is diversification. By expanding into new markets or offering new products or services, companies can reduce their reliance on a single source of revenue and mitigate the risks of economic uncertainty. Diversification lets businesses reach new customer segments, pursue untapped opportunities, and capitalize on emerging trends.

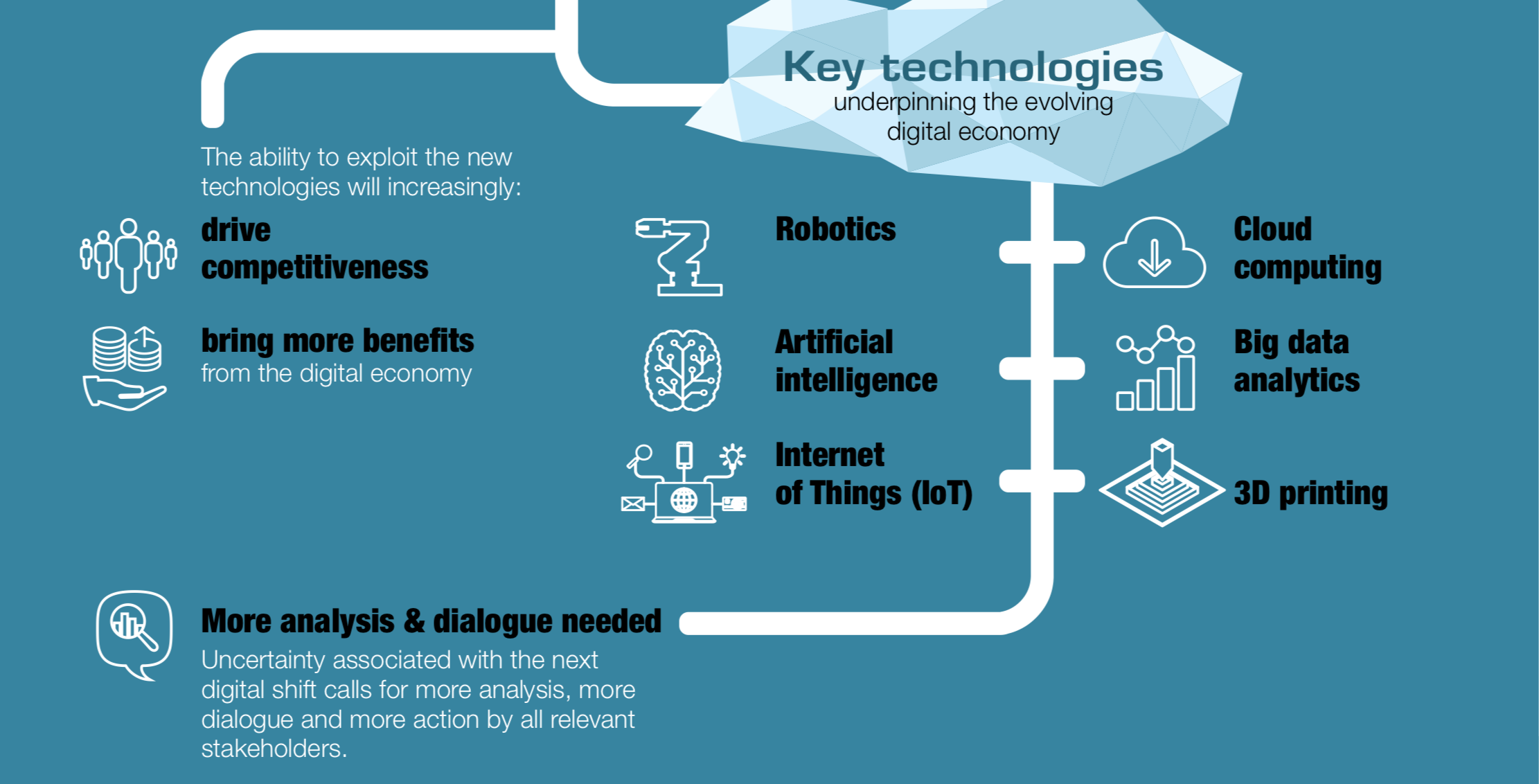

- Innovation and Adaptation: Another key strategy for driving growth during uncertain times is innovation and adaptation. Companies that are able to quickly identify changing customer needs and market dynamics can adjust their business models, products, or services accordingly. This may involve leveraging technology to streamline processes, developing new solutions tailored to current demands, or even completely pivoting the business model.

- Strategic partnerships and collaborations: Collaborating with strategic partners can be a powerful way to drive corporate growth in uncertain times. By joining forces with complementary businesses or industry leaders, companies can access new resources, expertise, distribution channels, and customer bases. Strategic partnerships also enable knowledge sharing and mutual support during challenging times.

Case studies of companies that have successfully driven growth during uncertain times

Companies that have grown through uncertain times offer valuable inspiration and guidance for businesses navigating challenging economic landscapes. One example is Amazon, which continued to grow significantly during the 2008 global financial crisis. Instead of retreating, the company expanded its product offerings and capitalized on consumers' increasing preference for online shopping. This strategic move not only helped Amazon maintain its market position but also propelled it toward becoming a dominant force in e-commerce.

Another notable case study is Netflix, which faced stiff competition from video rental stores when it first entered the market. Instead of following industry norms, Netflix disrupted the traditional model, first with a subscription-based DVD-by-mail service and later by introducing streaming. By focusing on innovation and adapting to changing consumer preferences, Netflix was able to drive substantial growth even during uncertain times.

In both cases, diversification played a crucial role in driving corporate growth amid uncertainty. These companies identified new opportunities within their industries and capitalized on them effectively. They also prioritized customer-centric strategies, constantly innovating and adapting their business models to evolving consumer needs.

Strategic partnerships and collaborations are another key driver of growth during uncertain times. Take Uber's partnership with Spotify: the collaboration let Uber riders personalize their trip with music while giving Spotify exposure to millions of potential subscribers. By leveraging each other's strengths and reaching new audiences together, both companies extended their reach and strengthened their growth.

These case studies show that successful corporate growth during uncertain times requires visionary leadership that embraces change rather than shying away from it. It demands an agile mindset that can spot opportunities amid challenges while staying focused on delivering value to customers.

By studying these success stories closely, businesses can gain insight into effective strategies for driving corporate growth amid uncertainty, whether through diversification or innovative partnerships, and ultimately thrive despite unpredictable circumstances.

Growth doesn't happen overnight; it requires ongoing effort. Every company's path to success will differ based on its unique circumstances, so it's important to have a well-defined strategy that you evaluate and adjust continuously.

2018 is all about bringing people and machines even closer together. Dell Technologies has predicted the key IT trends for 2018. Driven by technologies such as artificial intelligence, virtual and augmented reality, and the Internet of Things, deeper cooperation between people and machines will accelerate the digitization of companies. The following trends are shaping 2018: