Data Analytics Trends for 2018

Using data profitably and creating added value is a key factor for companies in 2018. The world is becoming increasingly networked, and ever larger amounts of data are accumulating. With BI and analytics solutions and the right strategies, companies can turn this data into a real competitive advantage. The top data analytics trends for 2018 are listed below.

How new technologies support analysis

Machine learning (ML) technology is improving day by day and is becoming the ultimate tool for in-depth analysis and accurate predictions. ML is a branch of artificial intelligence (AI) that uses algorithms to derive models from structured and unstructured data. The technology supports analysts through automation and thus increases their efficiency. Data analysts no longer have to spend time on labor-intensive tasks such as basic calculations, but can focus on the business and strategic implications of their analyses and develop appropriate next steps. ML and AI will therefore not replace analysts, but make their work more efficient, effective and precise.
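To make "deriving a model from data" a little more concrete, here is a minimal sketch, assuming the simplest possible case: one numeric input and ordinary least-squares linear regression, written in plain Python. Real ML systems use far richer models and libraries; the function names `fit_line` and `predict` and the sample figures are hypothetical and serve only as an illustration.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the fitted line to a new input value."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical example: monthly ad spend (x) versus sales (y).
spend = [1.0, 2.0, 3.0, 4.0]
sales = [3.0, 5.0, 7.0, 9.0]
model = fit_line(spend, sales)
print(model)                # (2.0, 1.0)
print(predict(model, 5.0))  # 11.0
```

The "model" here is just two learned numbers, but the pattern is the same one the paragraph describes: the algorithm derives parameters from data, and the analyst then interprets what the prediction means for the business.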

Natural Language Processing (NLP)

According to Gartner, by 2020 every second analytical query will be generated via search, natural language processing (NLP) or voice. NLP will make it possible to ask more sophisticated questions of data and to receive relevant answers that lead to better insights and decisions. At the same time, research is making progress in exploring the ways in which people ask questions. The results of this research will benefit data analysis, as will findings about where NLP is best applied, because the new technology does not make sense in every situation. Its benefit lies rather in supporting the appropriate work processes in a natural way.

Crowdsourcing for modern governance

With self-service analytics, users from a wide range of areas gain valuable insights, which also inspires them to adopt innovative governance models. The decisive factor here is that data is available only to the respective authorized users. The impact of BI and analytics strategies on modern governance models will continue in the coming year: IT departments and data engineers will provide data only from trusted sources. Alongside the trend towards self-service analytics, more and more end users will have the freedom to explore their data without creating security risks.

More flexibility in multi-cloud environments

According to a recent Gartner study, around 70% of businesses will implement a multi-cloud strategy by 2019 to avoid depending on a single legacy solution. A multi-cloud environment also lets them quickly determine which provider offers the best performance and support for a given scenario. However, this added flexibility also increases the cost of allocating workloads across vendors and of familiarizing internal development teams with a variety of platforms. In a multi-cloud strategy, cost estimates for deployment, internal usage, workload and implementation should therefore be listed separately for each cloud platform.
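The recommendation to list cost estimates separately for each cloud platform can be sketched as a small per-platform ledger. This is a minimal illustration, assuming the four cost categories named above; the class `CostEstimate`, the function `summarize`, the provider names and all figures are hypothetical placeholders, not real pricing data.

```python
from dataclasses import dataclass


@dataclass
class CostEstimate:
    """Estimated costs for one cloud platform, one field per category from the text."""
    deployment: float
    internal_usage: float
    workload: float
    implementation: float

    def total(self) -> float:
        return self.deployment + self.internal_usage + self.workload + self.implementation


def summarize(estimates: dict[str, CostEstimate]) -> dict[str, float]:
    """Return each platform's total while the per-category breakdown stays separate."""
    return {platform: est.total() for platform, est in estimates.items()}


# Hypothetical placeholder figures for two unnamed providers.
estimates = {
    "provider_a": CostEstimate(deployment=1000.0, internal_usage=400.0,
                               workload=2500.0, implementation=800.0),
    "provider_b": CostEstimate(deployment=700.0, internal_usage=600.0,
                               workload=3000.0, implementation=500.0),
}
totals = summarize(estimates)
print(totals)                         # {'provider_a': 4700.0, 'provider_b': 4800.0}
print(min(totals, key=totals.get))    # provider_a
```

Keeping one record per platform, rather than a single blended figure, makes it easy to see which category (for example workload) drives the difference between providers.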


Increasing importance of the Chief Data Officer

With data and analytics now playing a key role for companies, a growing gap is emerging between the responsibility for insights and the responsibility for data security. To close it, more and more organizations are anchoring analytics at board level. In many places, there is now a so-called Chief Data Officer (CDO) or Chief Analytics Officer (CAO), whose task is to establish a data-driven corporate culture: to drive change in business processes, overcome cultural barriers and communicate the value of analytics at all levels of the organization. Because the CDO or CAO is focused on results, the development of analytics strategies is increasingly becoming a top priority.

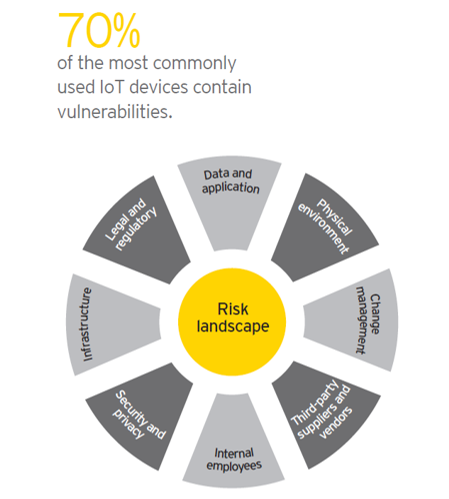

The IoT innovation

The so-called Location of Things, a subcategory of the Internet of Things (IoT), refers to IoT devices that can determine and communicate their geographical position. When evaluating activities and usage patterns, users can therefore take into account not only the collected data but also the location of the respective device and its context. In addition to tracking objects and people, the technology can also interact with mobile devices such as smartwatches, badges or tags, enabling personalized experiences. Such data makes it easier to predict which event will occur where and with what probability.
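The idea of predicting which event occurs where, and with what probability, can be illustrated with the simplest possible estimator: counting how often each event type was observed at each location. This is a minimal sketch, assuming location-tagged event records are already available; the function `event_probabilities`, the record format and the location and event names are hypothetical, and real systems would typically use more sophisticated models.

```python
from collections import Counter, defaultdict


def event_probabilities(records: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Estimate P(event | location) from (location, event) pairs by relative frequency."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for location, event in records:
        counts[location][event] += 1
    probabilities = {}
    for location, event_counts in counts.items():
        total = sum(event_counts.values())
        probabilities[location] = {event: n / total for event, n in event_counts.items()}
    return probabilities


# Hypothetical location-tagged events reported by IoT devices.
records = [
    ("warehouse", "pickup"), ("warehouse", "pickup"), ("warehouse", "delay"),
    ("warehouse", "pickup"), ("store", "checkout"), ("store", "checkout"),
]
probs = event_probabilities(records)
print(probs["warehouse"]["pickup"])  # 0.75
print(probs["store"]["checkout"])    # 1.0
```

Relative frequencies are only a starting point; they ignore time of day and other context that the paragraph mentions, which is exactly the information richer models would add.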

The role of the data engineer is gaining importance

Data engineers make a significant contribution to helping companies use their data for better business decisions. No wonder demand continues to rise: from 2013 to 2015, the number of data engineers more than doubled. In October 2017, LinkedIn listed more than 3,500 job openings under this title. Data engineers are responsible for extracting data from the company's foundational systems so that the insights derived from it can serve as a basis for decisions. Data engineers must not only understand what information is hidden in the data and what it means for the business; they must also develop the technical solutions that make the data usable.
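The extraction work described above can be sketched as a tiny extract-and-transform step. This is a minimal illustration, assuming an in-memory SQLite database stands in for a company's operational system; the `orders` table, its columns, the function names and the values are all hypothetical.

```python
import sqlite3


def build_source_system() -> sqlite3.Connection:
    """Create an in-memory stand-in for an operational system holding raw order rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders (region, amount) VALUES (?, ?)",
        [("north", 120.0), ("south", 80.0), ("north", 30.0), ("south", None)],
    )
    conn.commit()
    return conn


def extract_revenue_by_region(conn: sqlite3.Connection) -> dict[str, float]:
    """Extract raw rows, drop incomplete ones, and aggregate revenue per region."""
    revenue: dict[str, float] = {}
    for region, amount in conn.execute("SELECT region, amount FROM orders ORDER BY id"):
        if amount is None:  # transform step: skip rows with a missing amount
            continue
        revenue[region] = revenue.get(region, 0.0) + amount
    return revenue


source = build_source_system()
print(extract_revenue_by_region(source))  # {'north': 150.0, 'south': 80.0}
```

The aggregation could also be pushed into SQL with `GROUP BY`; doing it in Python here simply keeps the extract and transform steps visibly separate.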

Analytics brings science and art together

Technology is getting easier to use. Today, everyone can "play" with data without deep technical knowledge. Researchers who master the art of storytelling are in demand for data analysis. More and more companies see data analysis as a business priority, and they recognize that employees with analytical thinking and storytelling skills can give them a competitive advantage. Data analysis thus brings together aspects of art and science. The focus is shifting from simple data delivery to data-driven stories that lead to concrete decisions.

Universities are intensifying data science programs

For the second year in a row, data scientist ranked first in Glassdoor's annual list of the best jobs in America. The current report by PwC and the Business-Higher Education Forum shows how highly employers value applicants with data knowledge and analytical skills: 69% of the companies surveyed said that over the next four years they would prefer candidates with these skills to candidates without them. In the face of this growing demand from employers, training competent data experts is becoming more and more urgent. In the United States, universities are expanding their data science and analytics programs or establishing new institutes for these subjects. In Germany, too, some universities have begun to expand their offerings.