2019: The Year of the Data-Driven Management Revolution

Data is at the heart of digital transformation, which will accelerate faster than ever in 2019. Businesses are moving from monolithic legacy infrastructures to modern, distributed hybrid cloud infrastructures, so the way data is protected and managed must evolve as well.

By now, most of us know that data management is about managing data, regardless of the underlying infrastructure.

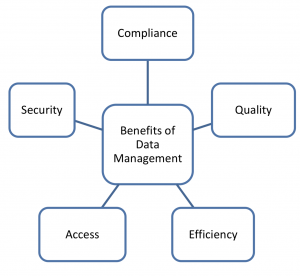

It covers all aspects of data planning, handling, analysis, documentation and storage, and it is present in every department of an organization. The objective of data management is to create a reliable database containing high-quality data by:

- Planning the data needs of an organization

- Data collection

- Data entry

- Data validation and checking

- Data manipulation

- Data file backup

- Data documentation
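The data validation and checking step can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the `Record` fields, the three rules in `validate` and the duplicate-ID check in `check_batch` are examples chosen for illustration, not rules taken from any particular product; real checks would follow an organization's own data requirements.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Record:
    """A hypothetical customer record used only for illustration."""
    customer_id: str
    email: str
    age: int


def validate(record: Record) -> list[str]:
    """Return the problems found in a single record (empty list if it is clean)."""
    problems = []
    if not record.customer_id:
        problems.append("missing customer_id")
    if "@" not in record.email:
        problems.append("email has no '@'")
    if not 0 <= record.age <= 130:
        problems.append("age out of range")
    return problems


def check_batch(records: list[Record]) -> dict[int, list[str]]:
    """Validate every record and also check for duplicate IDs across the batch.

    Returns a mapping from record index to its problems; clean records are omitted.
    """
    report: dict[int, list[str]] = {}
    seen_ids: set[str] = set()
    for index, record in enumerate(records):
        problems = validate(record)
        if record.customer_id in seen_ids:
            problems.append("duplicate customer_id")
        seen_ids.add(record.customer_id)
        if problems:
            report[index] = problems
    return report


batch = [
    Record("c1", "ana@example.com", 34),
    Record("c2", "not-an-email", 200),
    Record("c1", "ben@example.com", 41),
]
print(check_batch(batch))
# {1: ["email has no '@'", 'age out of range'], 2: ['duplicate customer_id']}
```

Keeping per-record rules (`validate`) separate from batch-level rules (`check_batch`) is a design choice: some problems, such as duplicates, only become visible when records are checked together.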

Each of these processes requires thought and time; each requires painstaking attention to detail.

Database files are the main element of data management. They contain text, numerical, image and other data in machine-readable form. Such files should be viewed as part of a database management system (DBMS), which supports a broad range of data functions, including data entry, checking, updating, documentation, and analysis.

Traditional data systems, such as relational databases and data warehouses, have been the primary way businesses and organizations have stored and analyzed their data for the past 30 to 40 years. In traditional storage management, the task is to manage storage hardware and the data it contains within a single system or cluster.

However, as information technology becomes increasingly cloud-based (driven by industry demand for reliable and scalable infrastructure), cloud storage has become a very feasible solution. Many organizations are migrating their data to cloud storage because they want it to be easily accessible, cost-effective and reliable. Today, storage lives in public and private clouds, in the IoT, at the network edge, and on mobile devices and new media that use new protocols. A new variety of data structures, containers, and interfaces supports data-driven use cases such as analytics, self-service multi-tenancy, artificial intelligence, and machine learning.

Looking back at 2018, no single data management solution combined all of the required core components in one product. To keep pace with evolving business needs and follow digital transformation, data management solution providers are turning to the open source community for new tools and capabilities to expand their products. However, because products lack interoperability, organizations still need to purchase multiple products to fully manage and protect data in modern hybrid clouds and new digital environments. This can be a nightmare for administrators when it comes to monitoring, reporting, managing, protecting and backing up data.

In 2019, data management vendors will expand their capabilities through alliances, acquisitions and native development in order to offer these key components in one product and fulfil every business requirement. This will simplify data management for administrators and give them the ability to intelligently manage, secure, protect and document everything under one management roof. It will also bring real data management solutions for all data requirements and data usage scenarios.

Data backup & business continuity

Downtime is real, and it’s costly. How costly exactly? Depending on the size of the organization, an hour of downtime costs anywhere from $9,000 to $700,000. On average, a business loses around $164,000 per hour of downtime. The numbers speak for themselves.
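The hourly figures above can be turned into a rough outage estimate by multiplying the length of an outage by the cost per hour. This minimal sketch assumes the cost stays constant for every hour offline, which is a simplification; the four-hour outage is a hypothetical example, and the hourly figures are the ones quoted above.

```python
def downtime_cost(hours: float, cost_per_hour: float) -> float:
    """Direct cost of an outage, assuming a constant cost for every hour offline."""
    return hours * cost_per_hour


AVERAGE_COST_PER_HOUR = 164_000    # average hourly figure quoted in the text (USD)
RANGE_PER_HOUR = (9_000, 700_000)  # smallest and largest hourly figures quoted in the text (USD)

outage_hours = 4  # hypothetical outage length
print(downtime_cost(outage_hours, AVERAGE_COST_PER_HOUR))         # 656000
print([downtime_cost(outage_hours, c) for c in RANGE_PER_HOUR])  # [36000, 2800000]
```

Even at the low end of the range, a single half-day outage quickly reaches a cost that is worth weighing against investment in backup and recovery.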

Ransomware and other malware attacks will continue to increase and evolve into smarter attacks. Alongside new data protection policies and strategies, the number of natural disasters and other events that can destroy entire data centers will continue to grow in 2019. Data protection and data management will therefore have to evolve toward smarter, more efficient ways of avoiding business interruption. For all these reasons, a good backup strategy and a disaster recovery plan will become even more important as integral parts of business continuity.

In conclusion, thinking about data backup is a good first step. Business continuity is equally important to consider, because it ensures your organization can get back up and running in a timely manner if disaster strikes.

Archives

Long-term “cold” storage will continue to grow as more data is used and produced than ever before. Storing long-term archive information calls for innovation, moving from cheap magnetic media to media that are less prone to losing bits over time. As semiconductor technology becomes cheaper, it could become an alternative for long-term storage and make it more efficient.
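One common way to detect archived data that has silently lost bits is a fixity check: record a checksum when a file is archived and recompute it later. The sketch below is a minimal illustration using SHA-256 from Python's standard library; the function names, the manifest layout and the sample file are hypothetical, and the check only detects damage, it does not prevent or repair it.

```python
from __future__ import annotations

import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in fixed-size chunks so large archive files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths: list[Path]) -> dict[str, str]:
    """Record a reference checksum for each file at the time it is archived."""
    return {str(p): sha256_of(p) for p in paths}


def verify(manifest: dict[str, str]) -> list[str]:
    """Return the paths whose current checksum no longer matches the manifest."""
    return [p for p, expected in manifest.items() if sha256_of(Path(p)) != expected]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        archived = Path(tmp) / "report-2018.csv"  # hypothetical archived file
        archived.write_bytes(b"id,value\n1,42\n")
        manifest = build_manifest([archived])
        print(verify(manifest))  # [] because nothing has changed yet
        archived.write_bytes(b"id,value\n1,43\n")  # '2' -> '3' flips a single bit
        print(verify(manifest))  # the file is now reported as damaged
```

In practice the manifest would be stored separately from the archive media, so that damage to the media cannot also corrupt the reference checksums.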

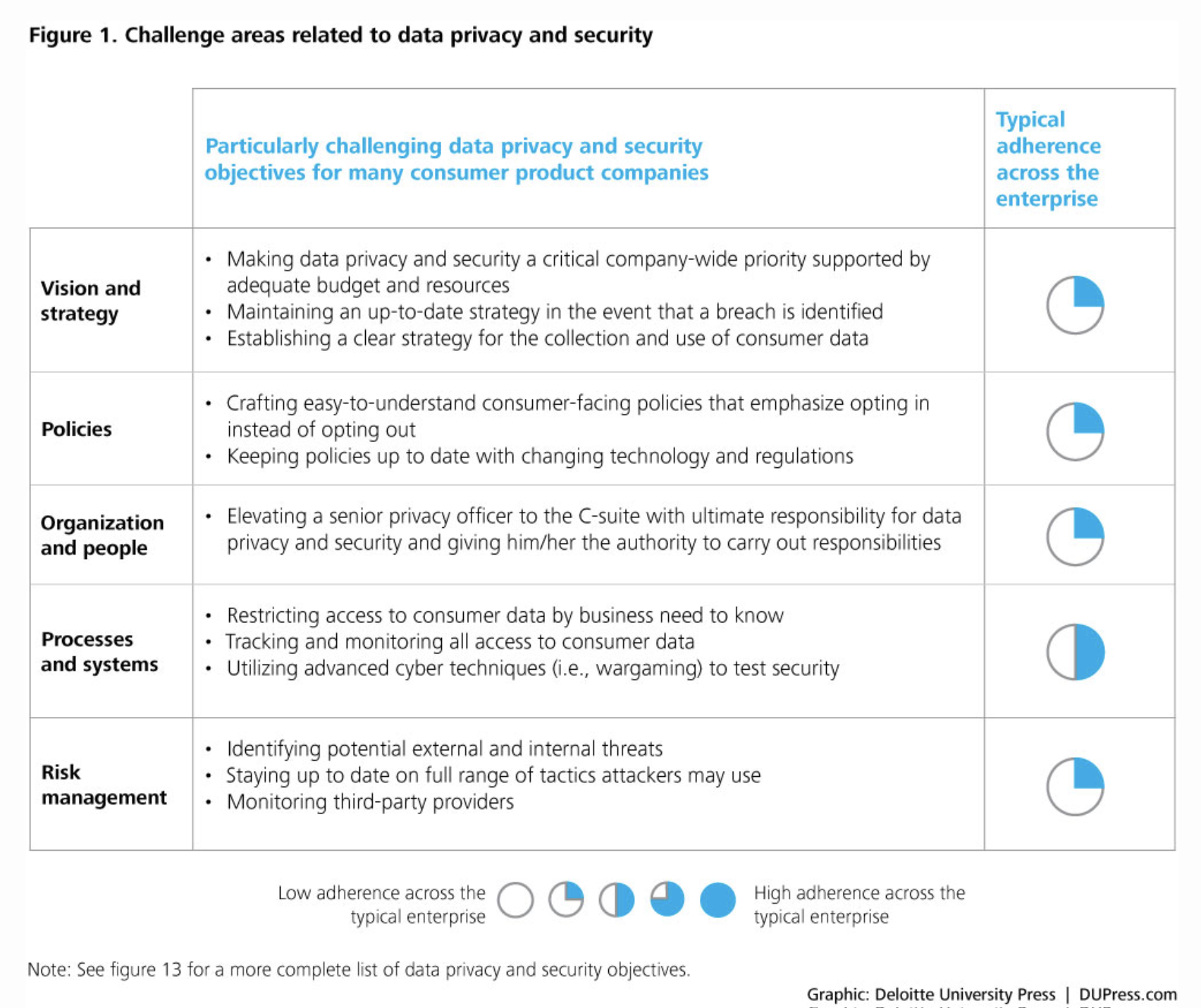

Compliance & Data Governance

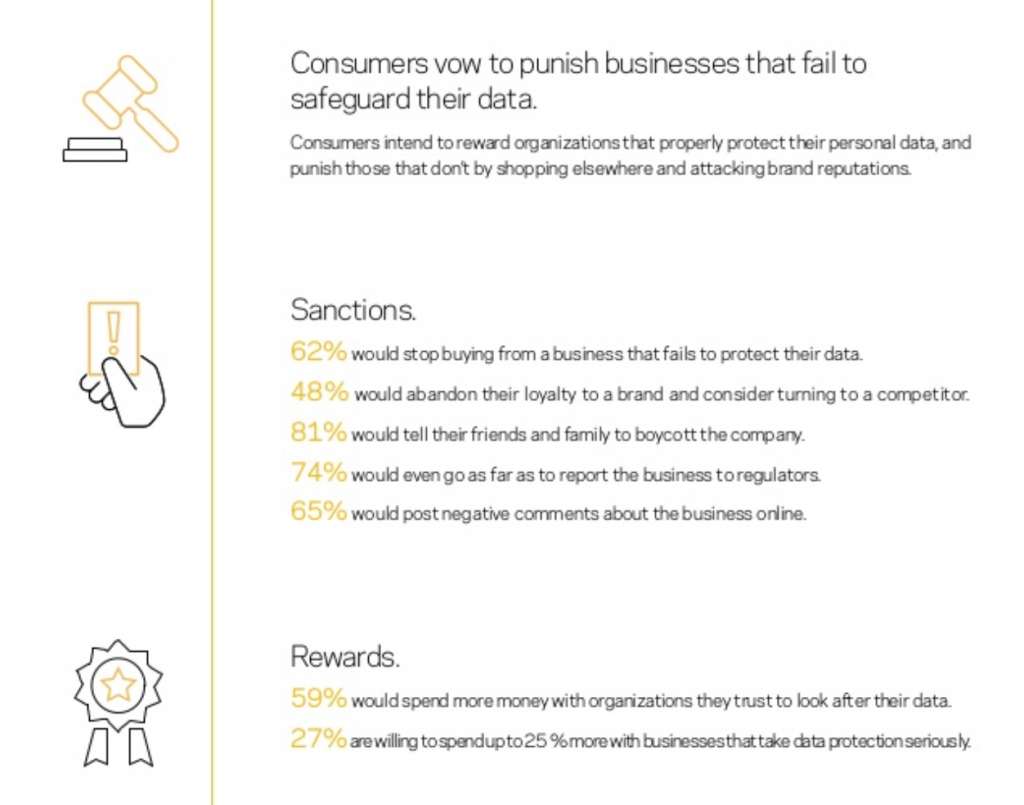

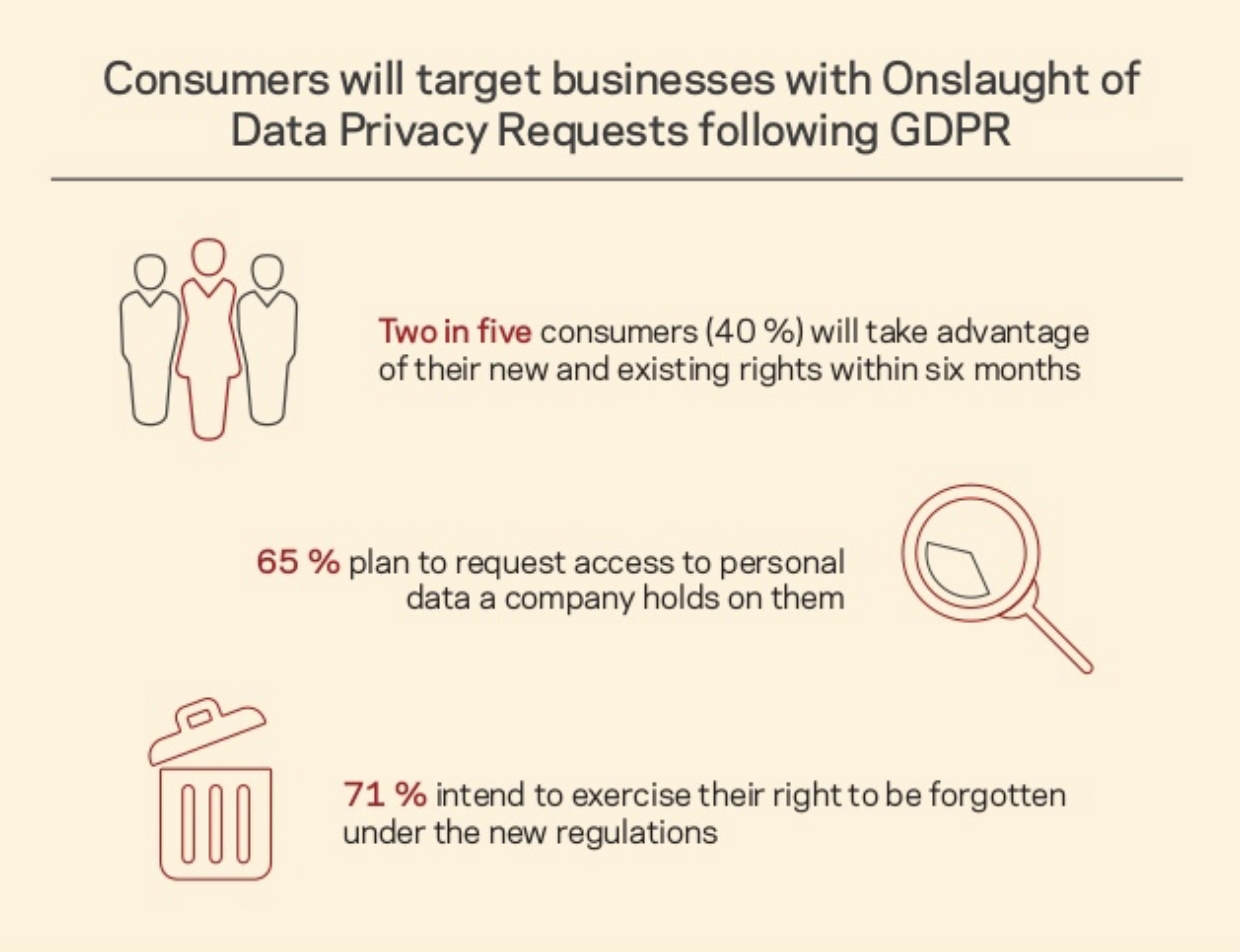

Vulnerabilities and regulations affecting data will continue to increase in 2019, and evolving regulatory requirements demand constant attention. Privacy concerns are pushing organizations to implement data governance that goes well beyond legal demands. You need to identify sensitive data, benchmark controls and assess risks, and then move quickly to restore protection when compliance drifts.

In addition to complying with the GDPR, which became enforceable on 25 May 2018, companies must be able to prove compliance or face heavy fines. The ePrivacy Regulation is expected to be implemented in the second half of the year. It aims to keep pace with advances in electronic communications and related data in e-mail, news, blogs, websites and IoT devices. There will be some overlap between the ePrivacy Regulation and the GDPR, but the main difference is that the ePrivacy Regulation covers only electronic communications, whereas the GDPR covers all kinds of personal data. Data management solution providers will need to offer simple, innovative ways to help companies demonstrate and maintain compliance with these new regulatory measures.

Capacity optimization

Capacity management is the practice of planning, managing, and optimizing IT infrastructure resource utilization so that application performance stays high and infrastructure cost stays low. It’s a balancing act between cost and performance that requires insight into the current and future usage of compute, storage, and network resources. Optimizing resources such as storage capacity is critical to cost control. As the use of new applications such as analytics, machine learning and artificial intelligence increases, so will the need for capacity optimization; otherwise, IT budgets will spiral out of control for companies that make digital transformation part of their business initiatives.
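Insight into future storage usage often starts with a simple trend projection. The sketch below fits a straight line to past usage samples with ordinary least squares and estimates how many days remain before a volume fills up. It assumes growth is roughly linear, which real workloads often are not; the sample measurements and the 100 TB capacity are hypothetical.

```python
from __future__ import annotations


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(days: list[float], used_tb: list[float], capacity_tb: float) -> float | None:
    """Project the linear trend forward and return days remaining after the last sample.

    Returns None when usage is flat or shrinking, since the trend never reaches capacity.
    """
    slope, intercept = fit_line(days, used_tb)
    if slope <= 0:
        return None
    day_full = (capacity_tb - intercept) / slope
    return max(0.0, day_full - days[-1])


# Hypothetical samples: storage used (TB) measured every 30 days on a 100 TB system.
samples_days = [0, 30, 60, 90]
samples_used = [40.0, 43.0, 46.0, 49.0]
print(days_until_full(samples_days, samples_used, 100.0))  # roughly 510 days
```

A projection like this is only a starting point; the new analytics and machine learning workloads mentioned above can change growth rates abruptly, so the forecast should be refreshed as new measurements arrive.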

Visibility

Access to real-time analytics is crucial for business decision makers. Whether you’re streamlining workflows, forecasting resources or just trying to get a grip on what’s going on, you need metrics you can trust.

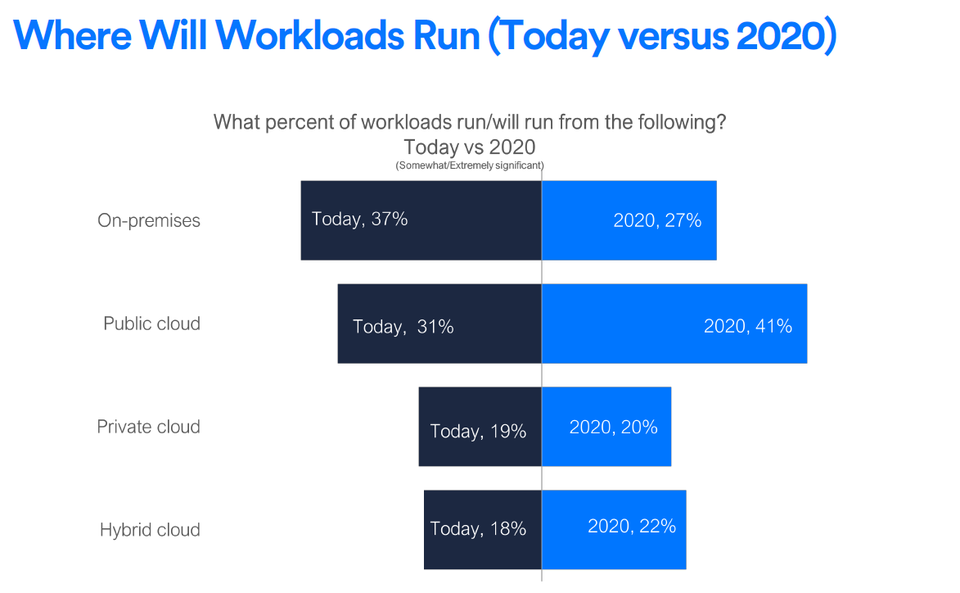

Today, more than 320 million workloads are active in data centers worldwide. It is estimated that there will be over 450 million workloads worldwide by 2020, with at least half of them running in the public cloud. This growing use of the public cloud within hybrid cloud infrastructures increases the complexity of data management. Data transparency will be key to improving hybrid cloud environments and lowering their cost.