A Data Analytics Roadmap

The volume of data that governments, businesses and people around the world produce is growing exponentially, driven by digital technologies. Organizations are changing their business models, building new expertise and devising new ways of managing and unlocking the value of their data.

Businesses around the world have recognized that data is a hugely important asset. Every organization is at a different stage of its “data journey,” whether it is cutting costs or pursuing more ambitious goals such as improving the customer experience, but there is no way back. In fact, many companies are now at the stage where data defines and drives corporate strategy.

Infosys recently completed a study of more than 1,000 companies with sales exceeding $1 billion in 12 different industries across the US, Europe, Australia and New Zealand. The aim of the survey was to obtain a comprehensive overview of the data journey undertaken by the surveyed organizations and to see how they are analyzing their data to achieve greater success.

The study found that more than 85% of surveyed companies have an enterprise-wide data analytics strategy. This high percentage is not surprising. However, having a strategy is not everything: organizations need to consider more aspects to successfully exploit the potential of their data. First, companies need a defined strategy that covers several areas. Second, execution of that strategy must be seamless, and that is the challenge.

Developing a sound strategy is the foundation. However, a data strategy is no longer only about identifying metrics and KPIs, developing management or operational reports, or improving technology. Rather, it should cover all areas of the company. In short, the data strategy is now an important part of the business, which heralds a shift away from traditional approaches.

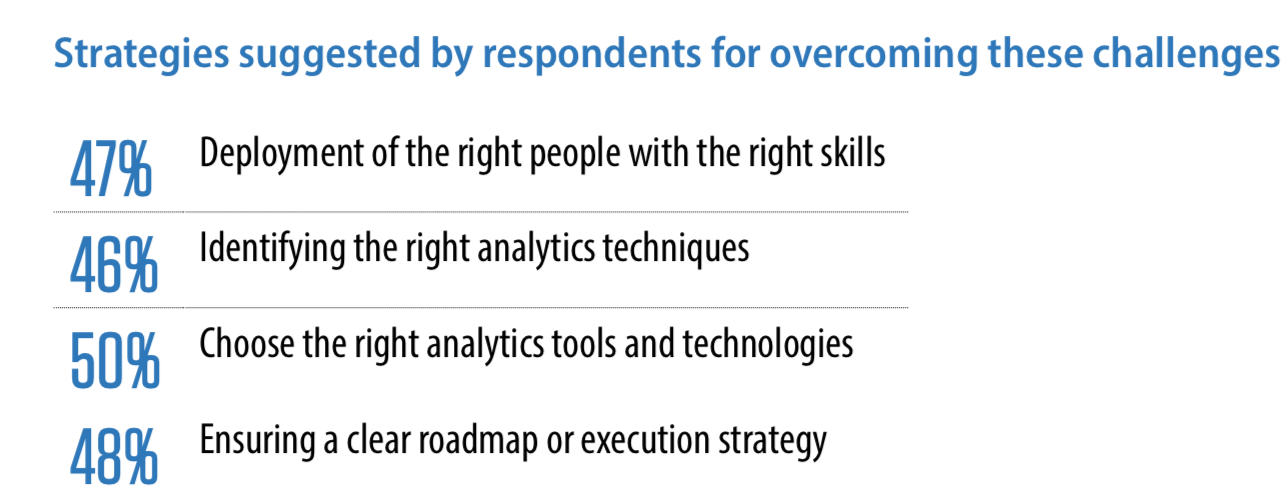

The survey also highlighted that enterprises across different industries face challenges that block them from implementing the right data analytics strategy. Of these, 44% cited integrating multiple datasets across sources as their biggest challenge, and 43% said their biggest challenge is understanding which analytics technique to deploy.

What are the characteristics of a good data strategy?

To begin with, the data strategy must align with the overall corporate strategy and with the business objective, whether that is increasing growth or profitability, managing risks, or transforming existing business models. In addition, the data strategy must be flexible, so that regular reviews and updates can keep up with changes in the business and marketplace and drive innovation that is faster, better, and more scalable.

Organizations need to create data strategies that match today’s realities. To build such a comprehensive data strategy, they need to fulfill current business and technology commitments while also addressing new goals and objectives. A good data strategy must be bidirectional: it must track current business trends and provide helpful insights for the future. This approach is only possible if companies pursue a multi-level data strategy that covers people, technology, governance, security and compliance, and finally a suitable operational model.

The data strategy must define a value framework and have a revenue tracking mechanism to justify the investments made. About 50% of the study participants agreed that a clear, pre-determined strategy is essential for smooth execution.

The best strategy is useless if the execution falters

Several hurdles can stop the proper implementation of a data strategy. Challenges can arise early, in choosing the right analysis tools, finding people with the necessary skills, building next-generation capabilities, and so on. Most of the challenges identified in the study occurred during the execution phase. Although they seem enormous at first glance, they can be addressed with careful planning. Planning for multiple regions, locations, and suppliers, and for talent acquisition and training, are a few ways to pave the way for smooth execution.

What role do external technology providers play in this?

An experienced external technology vendor can contribute at multiple levels: helping define business and data strategies that work together, identifying gaps in existing business models, transforming business and technology solutions, and developing, implementing, and maintaining best-in-class technology solutions.

In a world based on data, businesses need to do everything they can to adapt to a customer-centric strategy. Partnering with a high-performance technology provider can help companies better meet their business goals.